Featured faculty: DJ Corey

Senior Lecturer & EMT Program Director

Bouvé College of Health Sciences

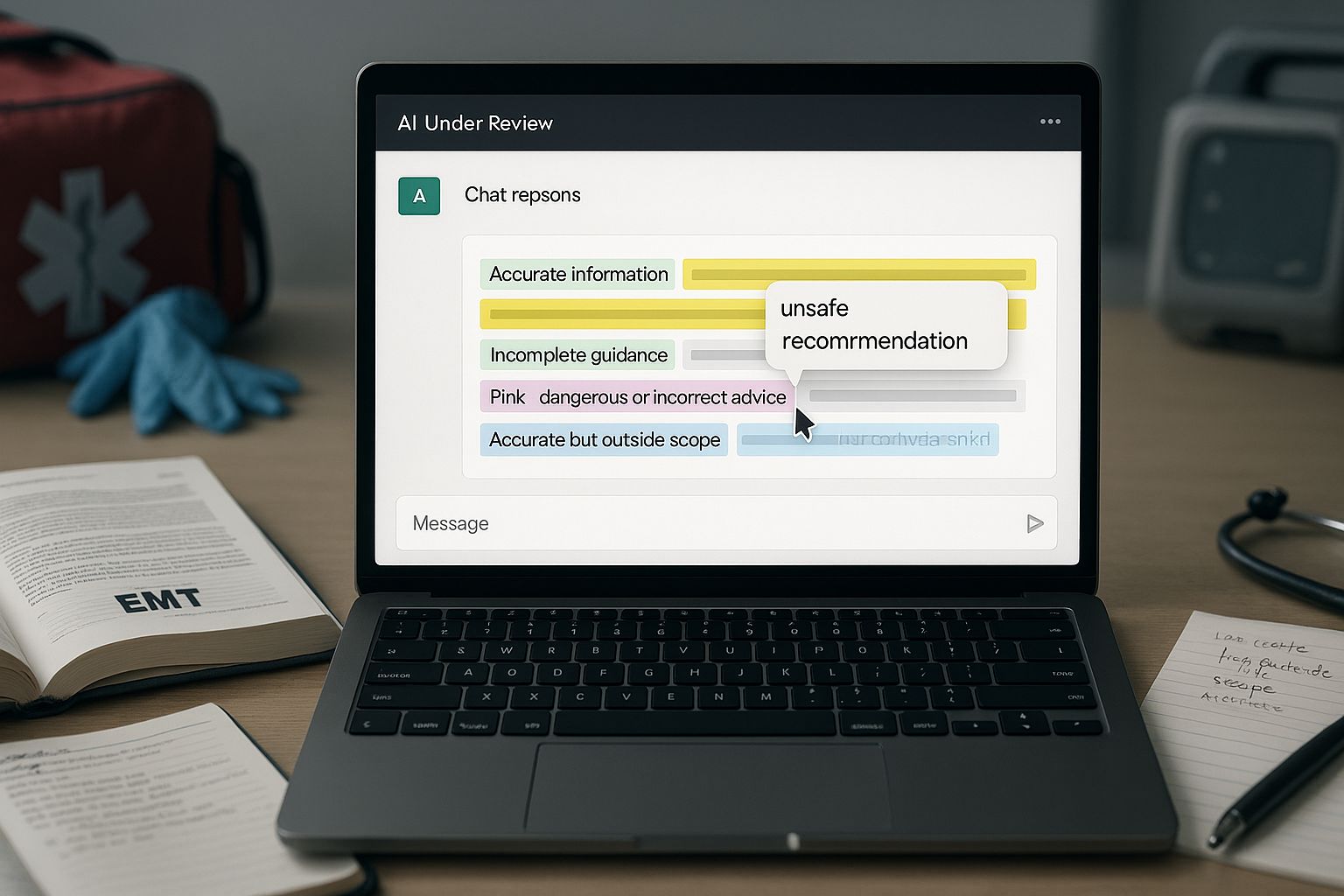

What he’s doing: In his EMT training courses, DJ has students evaluate AI-generated medical advice using a color-coding system. After picking an illness or injury, students independently draft a realistic case study of a patient experiencing that condition in an emergency medical situation. They then prompt any AI tool to manage the case as a Massachusetts EMT would, and they systematically analyze the AI's response using four colors: green for accurate information, yellow for incomplete guidance, pink for dangerous recommendations, and blue for medically sound advice that falls outside an EMT’s scope of practice. As part of the assignment, students must explain their color-coding and then assign and defend a grade for how well the AI tool handled the scenario.

This assignment transfers readily to other contexts. The scenario above is medical, but students could just as easily color-code AI-generated legal briefs, engineering solutions, or historical analyses for accuracy, completeness, and domain-appropriate application.

What's working: The assignment meets two learning objectives at once: it supports clinical decision-making while developing critical AI literacy. DJ describes the case-writing step as thinking “backward” from the symptoms to a scenario and the physiology that would cause them. Students must then think “forward” to evaluate the treatment recommendations the AI generates in response to that scenario.

The color-coding framework forces students to engage with every line of the AI's output, which prevents passive acceptance of generated content. Students consistently report that this is the first assignment that has required them to use AI while also exposing its limitations. The structured critique process has improved overall student performance, with learners increasingly using AI as a study tool rather than as a replacement for their own thinking. The requirement to grade the AI prompts metacognitive reflection about appropriate AI use in healthcare contexts.

What's next: The assignment has evolved from a one-off experiment into a three-part series covering medical emergencies, behavioral crises, and trauma cases. In spring 2026, DJ will integrate virtual reality simulations to expand case-based learning opportunities. VR will make it possible to run scenarios that are difficult to replicate with current resources, allowing students to practice decision-making across a wider range of emergency situations. By combining AI evaluation skills with immersive VR experiences, the course aims to develop healthcare providers who can critically assess technological tools while keeping their focus on patient safety and scope-appropriate care.